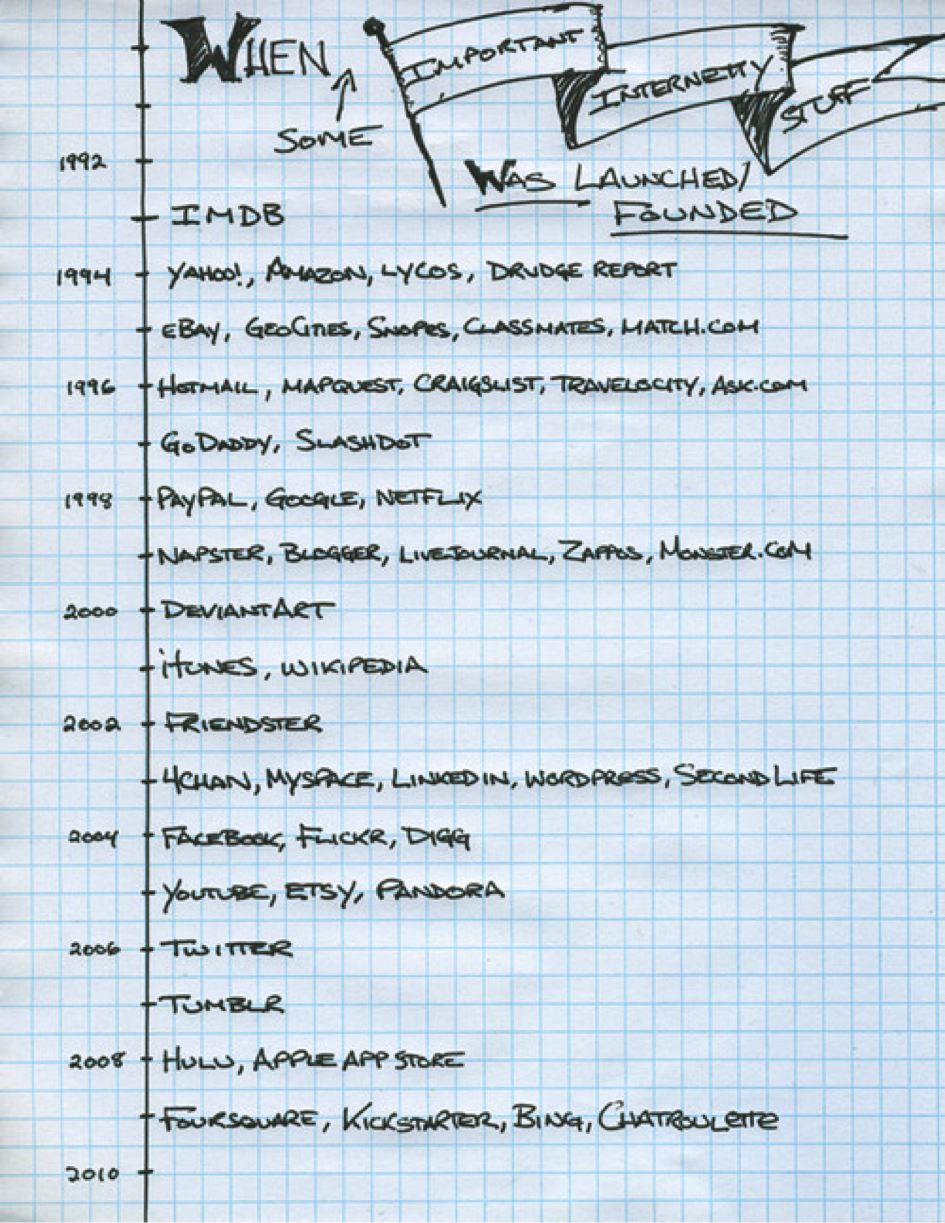

Since my time at Microsoft (almost 20 years ago!), I have been infected by the digital identity virus. At one point I was even part of the WEF Personal Identity workgroup. Since then, I have followed the identity space in some depth, some years more than others. See also my 2019 post on The Cambrian Explosion of Identity, which also features David Birch.

Earlier this week, that same David Birch published a very interesting post about digital identity: the bottom line of his insight is that we should be less interested in solving pre-digital conceptions of identity and more in (certified) credentials.

I recently came to a similar conclusion, but from a completely different angle.

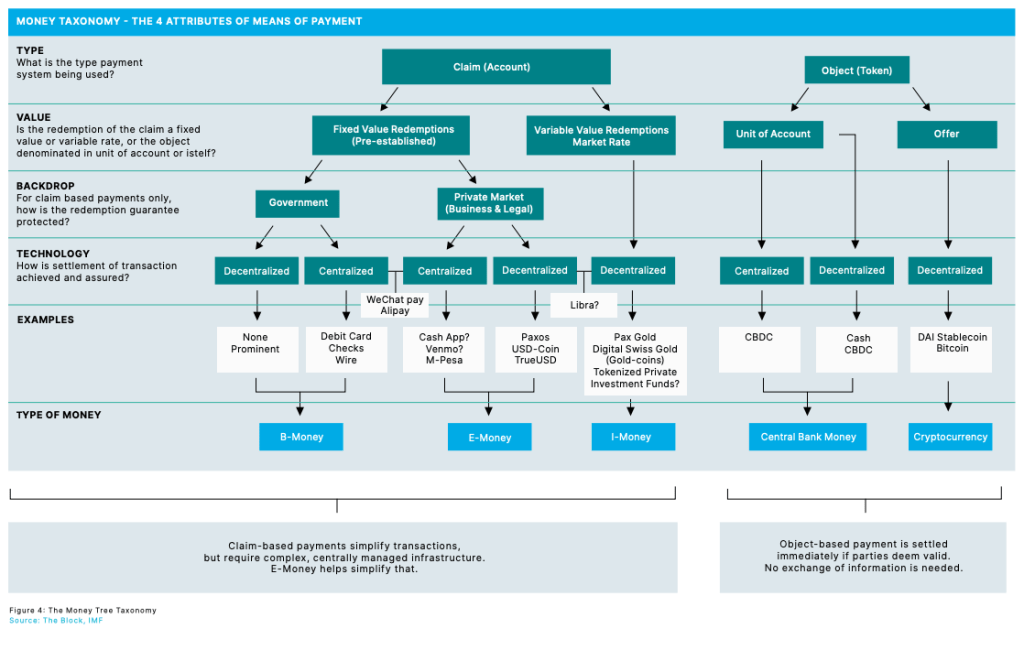

First, I bumped into The Block Whitepaper on CBDCs (Central Bank Digital Currencies) from August 2020, and was intrigued by the diagram on page 13:

The authors do a great job in explaining the difference between Claim-based (or account-based) money and Object-based (or token-based) money.

In other words, money is an asset, and it can be represented as a Claim (Account) or as an Object (Token).

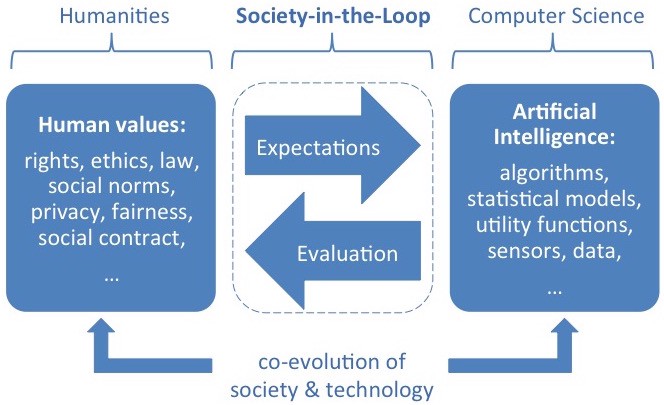

Then I ran into this May 2021 post by Andrew Hong on The Composability of Identity across Web 2.0 and Web 3.0. It’s quite a technical paper, and I probably only understand 5% of it, but my attention was again drawn to a diagram, this one on the composable and non-composable aspects of identity:

Andrew Hong writes: “The second layer (and onwards) highlights categories and products that allow us to represent that transaction data and/or social graph as tokens. Since tokens have the qualities of existence, flexibility, and reusability – then by the transitive property – our digital identity now does as well. I can move around these tokens at will to different accounts and in different combinations.”

I suddenly realized that the difference between Account-based money and Token-based money also applies to identity.

In other words, just like money, identity is also an asset (it always was), and it can be represented as a Claim (Account) or as an Object (Token).

During my 2003 Microsoft project, I was lucky enough to be exposed to more advanced identity thinking by wise people like Kim Cameron and the other folks from The Internet Identity Workshop gang, and I got quite familiar with their thinking of certified identity claims or claims-based identity.

But only now did I realize that both Money and Identity can be account-based or token-based, and that token-based is probably what’s going to help us make progress, because it makes identity and money programmable.

In other words:

For Account-based identity, you need to be sure of the identity of the account holder (the User ID / Password of your Facebook account, your company network, etc.). For Token-based identity (a certified claim about your age, for example), you need a certified claim about an attribute of that identity.

- For a paper/plastic ID Card/passport, it is your own signature and the signature of the issuing authority, plus plenty of other technical ways of ensuring the integrity of the card or passport (holograms, etc.). But it is a certified claim that the ID Card/passport is real, authentic, not tampered with.

- In the case of an electronic ID Card (like the one we have in Belgium), the certified “token” for authentication or for digital signature is stored on the microchip of the ID card, and can be enabled by the PIN code of the user (a bit like User ID / Password).

- For a certified (identity) claim (like proving that you are older than 18, for example), you basically need a signed token, a signed attribute. And because it is done digitally, (identity) attributes become programmable: you can assign them access and usage rights.
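To make the last bullet a bit more concrete, here is a minimal sketch of a signed attribute. It is my own illustration and not any real credential standard: the names `ISSUER_KEY`, `issue_claim` and `verify_claim` are hypothetical, and I use an HMAC with a shared secret only because it ships with Python. A real issuer would more likely sign with a private key so that anyone can verify with the public key.

```python
import hashlib
import hmac
import json

# Hypothetical issuer secret (assumption for this sketch). A real issuer
# would sign with a private key; verifiers would use the public key.
ISSUER_KEY = b"demo-issuer-secret"


def issue_claim(attribute: str, value, key: bytes = ISSUER_KEY) -> dict:
    """Return a token holding one attribute plus the issuer's signature."""
    payload = json.dumps({"attribute": attribute, "value": value}, sort_keys=True)
    signature = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_claim(token: dict, key: bytes = ISSUER_KEY) -> bool:
    """Check that the payload was not altered since the issuer signed it."""
    expected = hmac.new(key, token["payload"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, token["signature"])


# The token says "over 18: yes" and nothing else: no name, no birth date.
token = issue_claim("over_18", True)

# Changing the payload after issuance breaks the signature check.
tampered = {**token, "payload": token["payload"].replace("true", "false")}
```

Calling `verify_claim(token)` succeeds while `verify_claim(tampered)` fails, which is the digital counterpart of the hologram on the plastic card.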

For Account-based money, you need to be sure of the identity of the account owner (the User ID / Password or other mechanism to access your account). For Token-based money (a 100€ bill, an NFT token, an ETH token, etc.) you need a certified claim about an attribute of that money.

- For a 100€ bill it is the signature of the President of the ECB (European Central Bank) and plenty of other technical ways of ensuring the integrity of the paper bill (holograms etc.). But it is a certified claim that the 100€ bill is real, authentic, not tampered with.

- For digital money, it is a signed and encrypted token representing one or more certified aspects of that money. And because it is done digitally, money becomes programmable: you can assign it access and usage rights, just like you could do for identity aspects.
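As a rough sketch of what “usage rights” on money could look like, assume a token that carries its own spending rules. Everything here is hypothetical (the `MoneyToken` class, the category names, the `can_spend` function) and it leaves out the signing and encryption entirely. It only shows the programmable part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoneyToken:
    """A hypothetical money token that carries its own usage rights."""
    amount_eur: int
    allowed_categories: frozenset  # empty set means "no restriction"


def can_spend(token: MoneyToken, category: str, price_eur: int) -> bool:
    """Decide from the token's own rules whether a payment is allowed."""
    if price_eur > token.amount_eur:
        return False  # not enough value on the token
    # Unrestricted tokens pass; restricted ones must match the category.
    return not token.allowed_categories or category in token.allowed_categories


# A 20 EUR token that may only be used for food and coffee.
meal_voucher = MoneyToken(amount_eur=20, allowed_categories=frozenset({"food", "coffee"}))
```

With this token, `can_spend(meal_voucher, "coffee", 5)` is allowed, while paying for `"fuel"` or paying 25 EUR is refused. Note that the check never asks who holds the token, only what the token itself permits.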

So, I come – although from a different angle – to the same (or at least similar) conclusion as David Birch in his latest post about identity that (certified) credentials are the way forward.

“These credentials would attest to my ability to do something: they would prove that I am entitled to do something (see a doctor, drink in the pub, read about people who are richer than me), not who I am.” (David Birch)

I am just adding the money dimension to it, and using the same sentence, I can now say:

“These credentials would attest to the money’s ability to do something: they would prove that the money is entitled to do something (pay for Starbucks, pay for food, only to be used if there is enough money on my account, etc.), not whose money it is.” (Petervan)

Post Scriptum:

You could also consider NFTs as certified claims of something (in today’s hype, they are certified claims of the authenticity of an artwork, but it could be anything, including identity or money). Amber Case, for example, referred to NFTs when mentioning the Unlock Protocol in her ongoing overview of micropayments and web monetization:

Unlock Protocol has a particularly inventive approach to NFTs — using them as customizable membership keys for certain sites. This allows people to set a length of time for the membership, or access to certain features like private Discord channels.

Content creators can place paywalls and membership zones in the form of “locks” on their sites, which are essentially access lists keeping track of who can view the content. The locks are owned by the content owners, while the membership keys are owned by site visitors.

In that sense, we can really look at certified credentials as keys to open something (a door, a website, a resource) or to enable something (a certain action, a certain right, a certain flow, etc.).
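The lock-and-key idea can be sketched in a few lines. This is a toy model of my own, not the Unlock Protocol's actual contracts or API: the `Lock` class, its fields, and the visitor names are all assumptions made up for illustration. The lock is an access list owned by the creator, and each key is an entry that expires.

```python
from dataclasses import dataclass, field


@dataclass
class Lock:
    """Hypothetical access list owned by a content creator."""
    name: str
    duration_s: int  # how long a membership key stays valid, in seconds
    keys: dict = field(default_factory=dict)  # visitor -> expiry timestamp

    def grant_key(self, visitor: str, now: float) -> None:
        """Give a visitor a key that expires duration_s seconds from now."""
        self.keys[visitor] = now + self.duration_s

    def opens_for(self, visitor: str, now: float) -> bool:
        """The lock opens only for visitors holding an unexpired key."""
        expiry = self.keys.get(visitor)
        return expiry is not None and now < expiry


# A members-only zone with 30-day keys; time is passed in explicitly
# so the example stays deterministic.
lock = Lock(name="members-only posts", duration_s=30 * 24 * 3600)
lock.grant_key("alice", now=0)
```

Here `lock.opens_for("alice", now=3600)` is true, it turns false once the 30 days have passed, and a visitor without a key never gets in. The credential says what the holder may open, not who the holder is.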

Rabbit hole? Curious to read your thoughts.

Warmest,